DM Control (multi-agent)

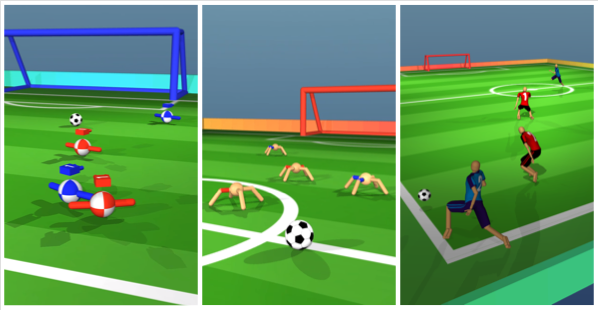

DeepMind Control: Soccer

DM Control Soccer is a multi-agent robotics environment where teams of agents compete in soccer. It extends the single-agent DM Control Locomotion library, powered by the MuJoCo physics engine.

Shimmy provides compatibility wrappers to convert all DM Control Soccer environments to PettingZoo.

Installation

To install Shimmy and the required dependencies:

```shell
pip install "shimmy[dm-control-multi-agent]"
```

We also provide a Dockerfile for reproducibility and cross-platform compatibility:

```shell
curl https://raw.githubusercontent.com/Farama-Foundation/Shimmy/main/bin/dm_control_multiagent.Dockerfile | docker build -t dm_control_multiagent -f - . && docker run -it dm_control_multiagent
```

Usage

Load a new dm_control.locomotion.soccer environment:

```python
from shimmy import DmControlMultiAgentCompatibilityV0

env = DmControlMultiAgentCompatibilityV0(team_size=5, render_mode="human")
```

Wrap an existing dm_control.locomotion.soccer environment:

```python
from dm_control.locomotion import soccer as dm_soccer

from shimmy import DmControlMultiAgentCompatibilityV0

env = dm_soccer.load(team_size=2)
env = DmControlMultiAgentCompatibilityV0(env)
```

Note: when wrapping an existing environment with the env argument, passing any argument other than render_mode will raise a ValueError:

- Use the env argument to wrap an existing environment.
- Use the team_size, time_limit, disable_walker_contacts, enable_field_box, terminate_on_goal, and walker_type arguments to load a new environment.
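The rule above can be sketched as a small argument check. The helper check_compat_args below is hypothetical and written only for illustration; it is not Shimmy's implementation, and its error message is an assumption.

```python
from typing import Any, Optional

# DM Control arguments listed in the note above (used only to load a new environment).
DM_CONTROL_ARGS = (
    "team_size",
    "time_limit",
    "disable_walker_contacts",
    "enable_field_box",
    "terminate_on_goal",
    "walker_type",
)


def check_compat_args(env: Optional[Any] = None, **kwargs: Any) -> str:
    """Hypothetical check: return "wrap" or "load", or raise ValueError.

    render_mode is accepted in both modes; a DM Control argument counts as
    specified when its value is not None.
    """
    specified = [name for name in DM_CONTROL_ARGS if kwargs.get(name) is not None]
    if env is not None and specified:
        # Wrapping an existing env: only env and render_mode are allowed.
        raise ValueError(f"cannot combine env with DM Control arguments: {specified}")
    return "wrap" if env is not None else "load"
```

For example, check_compat_args(team_size=2, render_mode="human") returns "load", check_compat_args(env=existing_env, render_mode="human") returns "wrap", and passing env together with team_size raises ValueError. Treating None as "not specified" matches the class signature, where every DM Control argument defaults to None.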

Run the environment:

```python
observations, infos = env.reset()

while env.agents:
    # this is where you would insert your policy
    actions = {agent: env.action_space(agent).sample() for agent in env.agents}
    observations, rewards, terminations, truncations, infos = env.step(actions)

env.close()
```

Environments are loaded as ParallelEnv, but can be converted to AECEnv using PettingZoo Wrappers.
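To see why a parallel environment can be exposed through the turn-based AEC interface, here is a toy sketch in which actions are collected one agent at a time and forwarded to a single parallel step once every agent has acted. The class ToyParallelToAEC and its methods are hypothetical and far simpler than PettingZoo's real conversion wrappers, which also manage observations, rewards, and agents that finish early.

```python
from typing import Any, Callable, Dict, List, Optional


class ToyParallelToAEC:
    """Hypothetical adapter: buffers one action per agent, then steps all at once."""

    def __init__(self, parallel_step: Callable[[Dict[str, Any]], Any], agents: List[str]):
        self.parallel_step = parallel_step
        self.agents = list(agents)
        self._index = 0
        self._pending: Dict[str, Any] = {}

    @property
    def agent_selection(self) -> str:
        # The agent whose turn it is in the turn-based (AEC) view.
        return self.agents[self._index]

    def step(self, action: Any) -> Optional[Any]:
        # Record the current agent's action and move on to the next agent.
        self._pending[self.agent_selection] = action
        self._index += 1
        if self._index < len(self.agents):
            return None  # still waiting for the remaining agents
        # Every agent has acted: forward the joint action to the parallel step.
        result = self.parallel_step(self._pending)
        self._pending = {}
        self._index = 0
        return result
```

With the real libraries, this conversion is provided by PettingZoo's parallel_to_aec function in pettingzoo.utils.conversions; the PettingZoo documentation describes its exact behavior.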

Class Description¶

- class shimmy.dm_control_multiagent_compatibility.DmControlMultiAgentCompatibilityV0(env: dm_control.composer.Environment | None = None, team_size: int | None = None, time_limit: float | None = None, disable_walker_contacts: bool | None = None, enable_field_box: bool | None = None, terminate_on_goal: bool | None = None, walker_type: dm_soccer.WalkerType | None = None, render_mode: str | None = None)

This compatibility wrapper converts multi-agent dm-control environments, primarily soccer, into a PettingZoo environment.

DeepMind's dm-control is a software stack for physics-based simulation and reinforcement learning environments, using MuJoCo physics.

Because of how the underlying environment is set up, this environment is nondeterministic, so setting a seed does not make episodes reproducible.

Note: to wrap an existing environment, only the env and render_mode arguments can be specified. All other arguments (marked [DM CONTROL ARG]) are specific to DM Control and are used to load a new environment.

- Parameters:

env (Optional[dm_env.Environment]) – existing dm control multi-agent environment to wrap

team_size (Optional[int]) – number of players for each team [DM CONTROL ARG]

time_limit (Optional[float]) – time limit for the game, in seconds [DM CONTROL ARG]

disable_walker_contacts (Optional[bool]) – flag to disable walker contacts [DM CONTROL ARG]

enable_field_box (Optional[bool]) – flag to enable field box [DM CONTROL ARG]

terminate_on_goal (Optional[bool]) – flag to terminate the environment on goal [DM CONTROL ARG]

walker_type (Optional[dm_soccer.WalkerType]) – specify walker type (BOXHEAD, ANT, or HUMANOID) [DM CONTROL ARG]

render_mode (Optional[str]) – rendering mode

- metadata: dict[str, Any] = {'name': 'DmControlMultiAgentCompatibilityV0', 'render_modes': ['human']}

- observation_space(agent: AgentID) → Space

observation_space.

Get the observation space from the underlying dm-control environment.

- Parameters:

agent (AgentID) – agent

- Returns:

observation_space – spaces.Space

- action_space(agent: AgentID) → Space

action_space.

Get the action space from the underlying dm-control env.

- Parameters:

agent (AgentID) – agent

- Returns:

action_space – spaces.Space
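The usage loop calls env.action_space(agent).sample() to draw a random action for each agent. Assuming the soccer walkers take bounded continuous controls, such a space can be pictured as per-dimension lower and upper bounds with uniform sampling. The sketch below models that with a hypothetical BoundedSpace class in NumPy; it is an illustrative stand-in, not the gymnasium space objects Shimmy actually returns, and the agent names, dimensionality, and bounds are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict

import numpy as np


@dataclass
class BoundedSpace:
    """Hypothetical stand-in for a bounded continuous action space."""

    low: np.ndarray
    high: np.ndarray
    rng: np.random.Generator = field(default_factory=np.random.default_rng)

    def sample(self) -> np.ndarray:
        # Draw each dimension uniformly from [low, high).
        return self.rng.uniform(self.low, self.high)

    def contains(self, x: np.ndarray) -> bool:
        # Same shape as the bounds and every entry within them.
        return x.shape == self.low.shape and bool(np.all((x >= self.low) & (x <= self.high)))


# Hypothetical per-agent table: agent names, 3 action dimensions, and the
# [-1, 1] bounds are illustrative assumptions, not DM Control Soccer's spec.
SPACES: Dict[str, BoundedSpace] = {
    agent: BoundedSpace(low=-np.ones(3), high=np.ones(3))
    for agent in ("player_0", "player_1")
}


def action_space(agent: str) -> BoundedSpace:
    # Mirrors the per-agent lookup pattern of env.action_space(agent).
    return SPACES[agent]
```

Keeping one space per agent, looked up by agent ID, is what lets the usage loop build its action dictionary with a single comprehension over env.agents.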

- render() → np.ndarray | None

render.

Renders the environment.

- Returns:

The rendering of the environment, depending on the render mode

- reset(seed: int | None = None, options: dict[AgentID, Any] | None = None) → tuple[ObsDict, dict[str, Any]]

reset.

Resets the dm-control environment.

- Parameters:

seed – the seed to reset the environment with

options – the options to reset the environment with (unused)

- Returns:

(observations, infos)

- step(actions: Dict[AgentID, ActionType]) → tuple[Dict[AgentID, ObsType], dict[AgentID, float], dict[AgentID, bool], dict[AgentID, bool], dict[AgentID, Any]]

step.

Steps through all agents with the actions.

- Parameters:

actions – dict of actions to step through the environment with

- Returns:

(observations, rewards, terminations, truncations, infos)
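Each value returned by step is a dictionary keyed by agent. Under the PettingZoo parallel API convention, an agent whose termination or truncation flag is True is dropped from env.agents, which is what ends the while env.agents loop in the usage example. The hypothetical helpers below sketch that bookkeeping and the accumulation of per-agent episode returns; they are not part of Shimmy or PettingZoo.

```python
from typing import Dict, List


def remaining_agents(
    agents: List[str],
    terminations: Dict[str, bool],
    truncations: Dict[str, bool],
) -> List[str]:
    """Hypothetical helper: keep only agents that are neither terminated nor truncated."""
    return [
        a for a in agents
        if not (terminations.get(a, False) or truncations.get(a, False))
    ]


def accumulate_returns(returns: Dict[str, float], rewards: Dict[str, float]) -> Dict[str, float]:
    """Hypothetical helper: add one step's per-agent rewards to running episode returns."""
    updated = dict(returns)
    for agent, reward in rewards.items():
        updated[agent] = updated.get(agent, 0.0) + float(reward)
    return updated
```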

Utils

Utility functions for DM Control Multi-Agent.

- shimmy.utils.dm_control_multiagent.load_dm_control_soccer(team_size: int | None = 2, time_limit: float | None = 10.0, disable_walker_contacts: bool | None = False, enable_field_box: bool | None = True, terminate_on_goal: bool | None = False, walker_type: dm_soccer.WalkerType | None = dm_soccer.WalkerType.BOXHEAD) → dm_control.composer.Environment

Helper function to load a DM Control Soccer environment.

Handles arguments that are None or unspecified, which would otherwise raise errors.

- Parameters:

team_size (Optional[int]) – number of players for each team

time_limit (Optional[float]) – time limit for the game, in seconds

disable_walker_contacts (Optional[bool]) – flag to disable walker contacts

enable_field_box (Optional[bool]) – flag to enable field box

terminate_on_goal (Optional[bool]) – flag to terminate the environment on goal

walker_type (Optional[dm_soccer.WalkerType]) – specify walker type (BOXHEAD, ANT, or HUMANOID)

- Returns:

env (dm_control.composer.Environment) – dm control soccer environment
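The None handling described above can be sketched as merging the caller's arguments over a table of defaults, skipping any argument left as None. The helper fill_defaults is hypothetical; its default values are copied from the load_dm_control_soccer signature, except that walker_type is represented by the string "BOXHEAD" (an assumption) so the sketch runs without dm_control installed.

```python
from typing import Any, Dict

# Defaults copied from the load_dm_control_soccer signature. walker_type is a
# string placeholder (assumption) instead of dm_soccer.WalkerType.BOXHEAD.
SOCCER_DEFAULTS: Dict[str, Any] = {
    "team_size": 2,
    "time_limit": 10.0,
    "disable_walker_contacts": False,
    "enable_field_box": True,
    "terminate_on_goal": False,
    "walker_type": "BOXHEAD",
}


def fill_defaults(**kwargs: Any) -> Dict[str, Any]:
    """Hypothetical helper: replace None or missing arguments with defaults."""
    resolved = dict(SOCCER_DEFAULTS)
    for name, value in kwargs.items():
        if name in resolved and value is not None:  # None means "use the default"
            resolved[name] = value
    return resolved
```

Testing value is not None, rather than truthiness, keeps explicit False flags such as terminate_on_goal=False from being replaced by the defaults.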