An API conversion tool for reinforcement learning environments.

Shimmy provides Gymnasium and PettingZoo bindings for popular external RL environments.

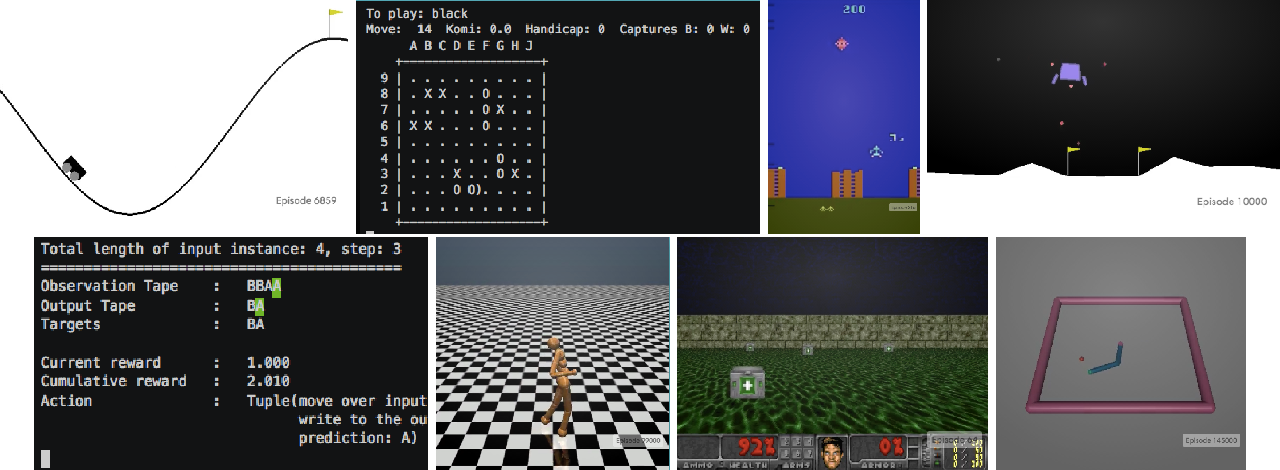

DM Control: 3D physics-based robotics simulation.¶ |

DM Control Soccer: Multi-agent cooperative soccer game.¶ |

DM Lab: 3D navigation and puzzle-solving.¶ |

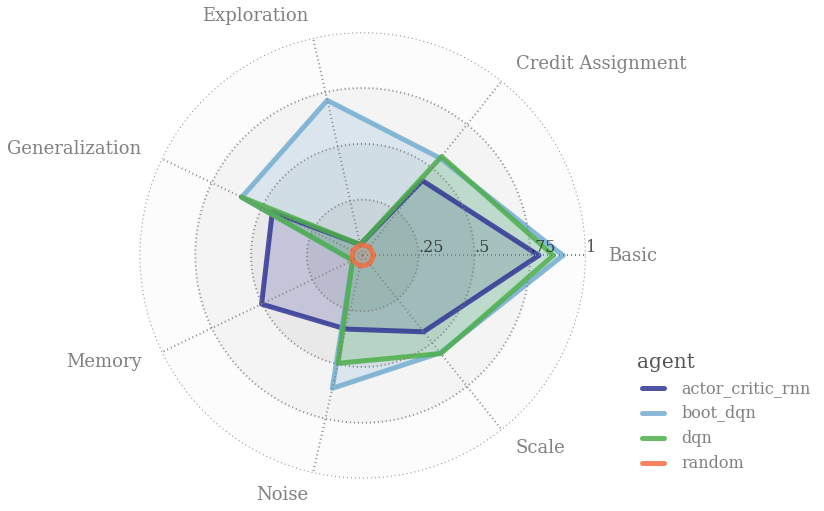

Behavior Suite: Test suite for evaluating model behavior.¶ |

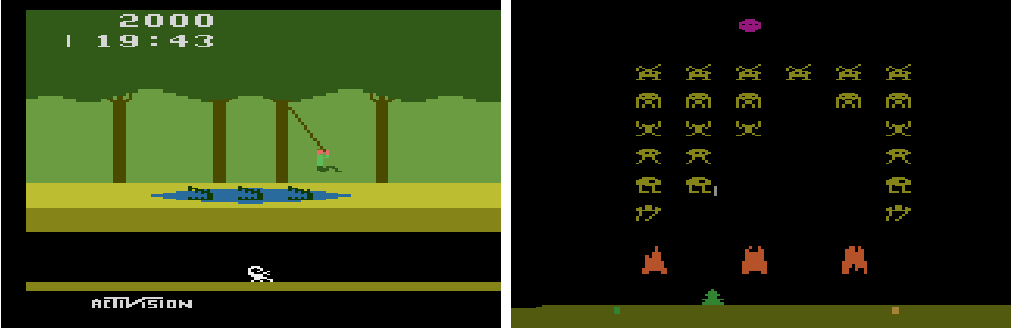

Atari Learning Environment: Set of 50+ classic Atari 2600 games.¶ |

Melting Pot: Multi-agent social reasoning games.¶ |

OpenAI Gym: Compatibility support for Gym V21-V26.¶ |

OpenSpiel: Collection of 70+ board & card game environments.¶ |
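Gym compatibility has to bridge two step APIs. Older Gym environments returned a single `done` flag from `step`, while Gymnasium splits it into `terminated` (the task ended) and `truncated` (a time limit or similar cut the episode short). The following is a minimal sketch of that idea only, not Shimmy's implementation. It assumes the old environment follows the Gym v21 convention of flagging time-limit cut-offs with `info["TimeLimit.truncated"]`. The classes `OldStyleCounterEnv` and `StepAPIAdapter` are hypothetical.

```python
from typing import Any, Dict, Optional, Tuple


class OldStyleCounterEnv:
    """Hypothetical Gym v21-style env: reset() returns obs, step() returns a 4-tuple."""

    def __init__(self, max_steps: int = 3) -> None:
        self.max_steps = max_steps
        self.t = 0
        self.rng_seed: Optional[int] = None

    def seed(self, seed: int) -> None:
        # Old Gym seeded through a separate method instead of reset(seed=...).
        self.rng_seed = seed

    def reset(self) -> int:
        self.t = 0
        return self.t

    def step(self, action: int) -> Tuple[int, float, bool, Dict[str, Any]]:
        self.t += 1
        info: Dict[str, Any] = {}
        done = self.t >= self.max_steps
        if done:
            # Gym v21's TimeLimit wrapper reported time-outs through this info key.
            info["TimeLimit.truncated"] = True
        return self.t, 1.0, done, info


class StepAPIAdapter:
    """Hypothetical adapter exposing Gymnasium-style reset/step over an old-style env."""

    def __init__(self, env: OldStyleCounterEnv) -> None:
        self.env = env

    def reset(self, seed: Optional[int] = None) -> Tuple[int, Dict[str, Any]]:
        if seed is not None:
            self.env.seed(seed)
        return self.env.reset(), {}

    def step(self, action: int) -> Tuple[int, float, bool, bool, Dict[str, Any]]:
        obs, reward, done, info = self.env.step(action)
        info = dict(info)  # copy so the wrapped env's dict is not mutated
        truncated = bool(info.pop("TimeLimit.truncated", False))
        terminated = done and not truncated  # "done" without the flag means a real terminal state
        return obs, reward, terminated, truncated, info


env = StepAPIAdapter(OldStyleCounterEnv(max_steps=3))
observation, info = env.reset(seed=42)
```

The adapter only reshapes return values; the wrapped environment's dynamics are untouched, which is the point of an API conversion layer.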

Environments can be interacted with using a simple, high-level API:

```python
import gymnasium as gym

env = gym.make("dm_control/acrobot-swingup_sparse-v0", render_mode="human")

observation, info = env.reset(seed=42)
for _ in range(1000):
    action = env.action_space.sample()  # this is where you would insert your policy
    observation, reward, terminated, truncated, info = env.step(action)

    if terminated or truncated:
        observation, info = env.reset()

env.close()
```
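The loop above depends only on Gymnasium's environment contract: `reset(seed=...)` returns `(observation, info)`, and `step(action)` returns `(observation, reward, terminated, truncated, info)`. To try that control flow without installing dm_control, here is a minimal pure-Python sketch. `CoinFlipEnv` and `RandomSpace` are hypothetical stand-ins for a wrapped environment and its `action_space`; they are not part of Shimmy or Gymnasium.

```python
import random
from typing import Any, Dict, Optional, Tuple


class RandomSpace:
    """Hypothetical discrete action space exposing a sample() method."""

    def __init__(self, n: int, rng: random.Random) -> None:
        self.n = n
        self.rng = rng

    def sample(self) -> int:
        return self.rng.randrange(self.n)


class CoinFlipEnv:
    """Hypothetical env following the Gymnasium reset/step signatures."""

    def __init__(self, max_episode_steps: int = 10) -> None:
        self.max_episode_steps = max_episode_steps
        self.rng = random.Random()
        self.action_space = RandomSpace(2, self.rng)  # shares the env's RNG
        self.t = 0
        self.coin = 0

    def reset(self, seed: Optional[int] = None) -> Tuple[int, Dict[str, Any]]:
        if seed is not None:
            self.rng.seed(seed)  # seeding here also seeds action_space.sample()
        self.t = 0
        self.coin = self.rng.randrange(2)
        return self.coin, {}

    def step(self, action: int) -> Tuple[int, float, bool, bool, Dict[str, Any]]:
        self.t += 1
        reward = 1.0 if action == self.coin else 0.0
        terminated = reward == 1.0  # episode ends on a correct guess
        truncated = self.t >= self.max_episode_steps  # time limit reached
        self.coin = self.rng.randrange(2)
        return self.coin, reward, terminated, truncated, {"t": self.t}

    def close(self) -> None:
        pass


def run(env, num_steps: int = 1000, seed: int = 42) -> int:
    """Same control flow as the Gymnasium loop; returns the number of finished episodes."""
    observation, info = env.reset(seed=seed)
    episodes = 0
    for _ in range(num_steps):
        action = env.action_space.sample()  # replace with your policy
        observation, reward, terminated, truncated, info = env.step(action)
        if terminated or truncated:
            episodes += 1
            observation, info = env.reset()
    env.close()
    return episodes


print(run(CoinFlipEnv()))
```

Because `run` touches nothing beyond `reset`, `step`, `action_space.sample`, and `close`, the same function should work unchanged with an environment created by `gym.make`.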