OpenAI Gym¶

OpenAI Gym¶

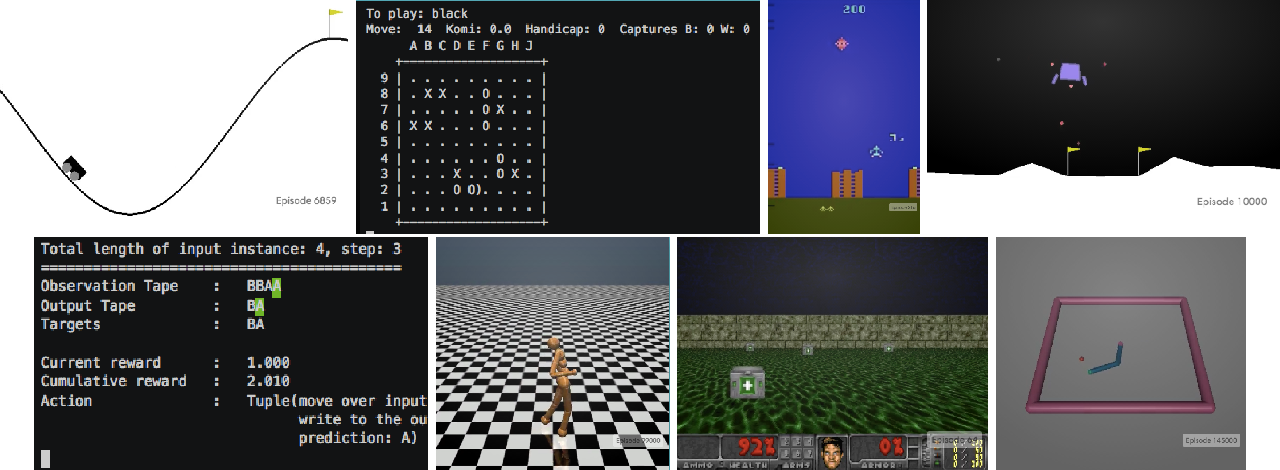

OpenAI Gym is a widely used standard API for developing reinforcement learning environments and algorithms. OpenAI stopped maintaining Gym in late 2020, which led the Farama Foundation to create Gymnasium, a maintained fork and drop-in replacement for Gym (see blog post).

Shimmy provides compatibility wrappers to convert Gym V26 and V21 environments to Gymnasium.

Installation

To install Shimmy and the required dependencies for Gym V26:

```shell
pip install "shimmy[gym-v26]"
```

To install Shimmy and the required dependencies for Gym V21:

```shell
pip install "shimmy[gym-v21]"
```

Note

For more information about compatibility with Gym, see https://gymnasium.farama.org/content/gym_compatibility/.

Usage

Load a Gym V21 environment:

```python
import gymnasium as gym

env = gym.make("GymV21Environment-v0", env_id="CartPole-v1", render_mode="human")
```

Run the environment:

```python
observation, info = env.reset(seed=42)

for _ in range(1000):
    action = env.action_space.sample()  # this is where you would insert your policy
    observation, reward, terminated, truncated, info = env.step(action)

    # Start a new episode when the current one ends for either reason
    if terminated or truncated:
        observation, info = env.reset()

env.close()
```

Class Description

- class shimmy.openai_gym_compatibility.GymV26CompatibilityV0(env_id: str | None = None, make_kwargs: dict[str, Any] | None = None, env: gym.Env | None = None)

This compatibility layer converts a Gym v26 environment to a Gymnasium environment.

Gym is the original open-source Python library for developing and comparing reinforcement learning algorithms. It provides a standard API for communication between learning algorithms and environments, as well as a standard set of environments compliant with that API. Since its release, Gym's API has become the field standard for this. In 2022, the team that had been maintaining Gym moved all future development to Gymnasium.


Either env_id or env must be passed as arguments.

- Parameters:

env_id – The environment ID to pass to gym.make

make_kwargs – Additional keyword arguments passed to gym.make

env – A Gym environment to wrap.
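The rule that either env_id or env must be supplied can be sketched as follows. This is a minimal, stdlib-only illustration, not Shimmy's source: resolve_env is a hypothetical helper, the make parameter is a hypothetical stand-in for gym.make (its default simply echoes its arguments), and the precedence shown (an explicit env wins over env_id) is an assumption.

```python
from typing import Any, Callable, Optional


def resolve_env(
    env_id: Optional[str] = None,
    make_kwargs: Optional[dict[str, Any]] = None,
    env: Any = None,
    make: Callable[..., Any] = lambda env_id, **kw: (env_id, kw),
) -> Any:
    """Hypothetical helper: choose the environment a compatibility wrapper would wrap.

    `make` stands in for gym.make; the default lambda just echoes its arguments.
    """
    if env is not None:
        # An already-constructed environment is used as-is (assumed precedence).
        return env
    if env_id is not None:
        # Otherwise build one from its ID, forwarding any extra make() kwargs.
        return make(env_id, **(make_kwargs or {}))
    raise ValueError("Either env_id or env must be passed.")


print(resolve_env(env_id="CartPole-v1", make_kwargs={"max_episode_steps": 200}))
# ('CartPole-v1', {'max_episode_steps': 200})
```

With a real installation, make would be gym.make and the return value a live environment; the echo default only keeps the sketch runnable without Gym installed.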

- reset(seed: int | None = None, options: dict | None = None) → tuple[ObsType, dict]

Resets the environment.

- Parameters:

seed – the seed to reset the environment with

options – the options to reset the environment with

- Returns:

(observation, info)

- step(action: ActType) → tuple[ObsType, float, bool, bool, dict]

Steps through the environment.

- Parameters:

action – action to step through the environment with

- Returns:

(observation, reward, terminated, truncated, info)
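Because Gym v26 already uses the same reset() and step() signatures as Gymnasium, a v26 compatibility wrapper mostly needs to forward calls unchanged. The sketch below illustrates that idea under this assumption; ToyV26Env and PassThroughWrapper are hypothetical classes, not Shimmy's implementation.

```python
import random
from typing import Any, Optional


class ToyV26Env:
    """Hypothetical env already on the v26 API: reset -> (obs, info), step -> 5-tuple."""

    def __init__(self, max_steps: int = 3) -> None:
        self.max_steps = max_steps
        self.t = 0
        self.rng = random.Random()

    def reset(self, seed: Optional[int] = None, options: Optional[dict] = None):
        if seed is not None:
            self.rng.seed(seed)  # seeding happens inside reset(), as in the v26 API
        self.t = 0
        return self.rng.random(), {}

    def step(self, action: Any):
        self.t += 1
        truncated = self.t >= self.max_steps  # episode cut off by a time limit
        return self.rng.random(), 1.0, False, truncated, {}


class PassThroughWrapper:
    """Hypothetical wrapper: the APIs already match, so calls are forwarded as-is."""

    def __init__(self, env: ToyV26Env) -> None:
        self.env = env

    def reset(self, seed: Optional[int] = None, options: Optional[dict] = None):
        return self.env.reset(seed=seed, options=options)

    def step(self, action: Any):
        observation, reward, terminated, truncated, info = self.env.step(action)
        return observation, reward, terminated, truncated, info
```

Calling PassThroughWrapper(ToyV26Env()).reset(seed=42) twice returns the same observation, since the seed is forwarded to the inner environment.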

- class shimmy.openai_gym_compatibility.LegacyV21Env(*args, **kwargs)

A protocol for OpenAI Gym v0.21 environments.

- observation_space: Space

- action_space: Space
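Because LegacyV21Env is a protocol, an environment satisfies it by shape rather than by inheriting from it. The sketch below shows that structural-typing idea with Python's typing.Protocol and runtime_checkable. OldStyleEnv and ToyV21Env are hypothetical; apart from observation_space and action_space, the members listed (reset, step, seed) are assumed from the old v0.21 API rather than taken from Shimmy's definition.

```python
from typing import Any, Optional, Protocol, runtime_checkable


@runtime_checkable
class OldStyleEnv(Protocol):
    """Hypothetical stand-in for a v0.21-style environment protocol."""

    observation_space: Any
    action_space: Any

    def reset(self) -> Any: ...

    def step(self, action: Any) -> tuple[Any, float, bool, dict]: ...

    def seed(self, seed: Optional[int] = None) -> Any: ...


class ToyV21Env:
    """Hypothetical env that matches the protocol without inheriting from it."""

    def __init__(self) -> None:
        self.observation_space = "Box(1,)"  # placeholder; a real env would use a Space
        self.action_space = "Discrete(2)"

    def reset(self) -> float:
        return 0.0

    def step(self, action: Any) -> tuple[float, float, bool, dict]:
        return 0.0, 1.0, True, {}

    def seed(self, seed: Optional[int] = None) -> list:
        return [seed]


# isinstance() only checks that the members exist, not their signatures.
print(isinstance(ToyV21Env(), OldStyleEnv))  # True
```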

- class shimmy.openai_gym_compatibility.GymV21CompatibilityV0(env_id: str | None = None, make_kwargs: dict | None = None, env: gym.Env | None = None, render_mode: str | None = None)

A wrapper which can transform an environment from the old API to the new API.

The old step API refers to step() returning (observation, reward, done, info) and reset() returning only the observation. The new step API refers to step() returning (observation, reward, terminated, truncated, info) and reset() returning (observation, info). Refer to the docs for details on the API change.

Known limitations:

- Environments that use self.np_random might not work as expected.

A wrapper which converts old-style envs to valid modern envs.

Some information may be lost in the conversion, so we recommend updating your environment.

- reset(seed: int | None = None, options: dict | None = None) → tuple[ObsType, dict]

Resets the environment.

- Parameters:

seed – the seed to reset the environment with

options – the options to reset the environment with

- Returns:

(observation, info)
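Old v0.21 environments take their seed through a separate seed() method and return only the observation from reset(). One plausible way to present the new reset() contract on top of that is sketched below. OldResetEnv and new_style_reset are hypothetical, and ignoring options is an assumption of this sketch, since the old API has no equivalent.

```python
from typing import Any, Optional


class OldResetEnv:
    """Hypothetical old-API env: seed() is separate and reset() returns only the observation."""

    def __init__(self) -> None:
        self.seeded_with: Optional[int] = None

    def seed(self, seed: Optional[int] = None) -> list:
        self.seeded_with = seed
        return [seed]

    def reset(self) -> Any:
        return 0.0


def new_style_reset(
    old_env: OldResetEnv, seed: Optional[int] = None, options: Optional[dict] = None
) -> tuple[Any, dict]:
    """Hypothetical helper exposing the new reset() contract on top of an old env."""
    if seed is not None:
        old_env.seed(seed)  # the old API seeds through a separate method
    # `options` has no old-API equivalent, so this sketch ignores it (assumption).
    observation = old_env.reset()
    return observation, {}  # old envs return no info dict, so supply an empty one
```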

- step(action: ActType) → tuple[Any, float, bool, bool, dict]

Steps through the environment.

- Parameters:

action – action to step through the environment with

- Returns:

(observation, reward, terminated, truncated, info)
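The core of the old-to-new step conversion is splitting the single done flag into terminated and truncated. The sketch below assumes the Gym v0.21 convention that the TimeLimit wrapper marks a time-limit cut-off with info["TimeLimit.truncated"] = True. to_terminated_truncated is a hypothetical name; Gymnasium ships a comparable helper, convert_to_terminated_truncated_step_api, in gymnasium.utils.step_api_compatibility.

```python
from typing import Any


def to_terminated_truncated(
    old_step: tuple[Any, float, bool, dict],
) -> tuple[Any, float, bool, bool, dict]:
    """Convert (obs, reward, done, info) into (obs, reward, terminated, truncated, info).

    Assumes a time-limit cut-off is flagged with info["TimeLimit.truncated"] = True.
    """
    observation, reward, done, info = old_step
    info = dict(info)  # copy so the caller's dict is not mutated
    hit_time_limit = bool(info.pop("TimeLimit.truncated", False))
    terminated = bool(done) and not hit_time_limit  # episode ended on its own
    truncated = bool(done) and hit_time_limit  # episode was cut off by the time limit
    return observation, reward, terminated, truncated, info
```

A done=True step without the time-limit flag becomes terminated=True, truncated=False; with the flag it becomes terminated=False, truncated=True. This is why information can be lost for environments that end episodes early without setting the flag.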